I've noticed a strange thing happening as I work alongside AI tools. I am feeling two very different emotions at the same time and struggling to harmonize them. On one hand I see the immediate benefits of using the AI tools now at my disposal and feel a growing FoMO (Fear of Missing Out) that I might fall behind if I do not master every new wave of capabilities that AI brings to my work.

On the other hand, I feel a growing anxiety over the significant professional, privacy, societal and personal implications of what AI is now able to do. I'm drawn to the latest tool like a moth to a flame, but when I get too close, I flutter away as my wings get singed.

I see plenty of people talking with abandon about the benefits and others talking with equal emotion about the dangers. What I'm not seeing is people talking about the impact of the interplay between these two on the average person. I believe that the greatest danger is not in the extremes but in the middle. Remember that most people don't live in an extreme position. Most people are stuck somewhere in the middle trying to make sense of it all. That is normal. But what is not normal is to have such a heightened level of euphoria and alarm extending over a long period of time without an ability to come to resolution.

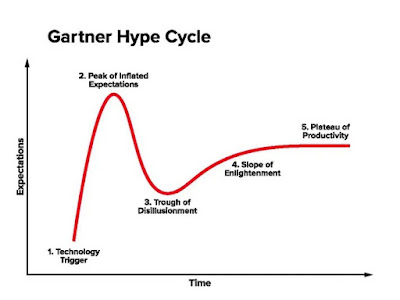

What I have noticed in myself is this: because so much about the implications of AI is unknown, I vacillate between excitement and dread on an hour-by-hour, day-by-day cycle. This roller coaster is not novel. The consultancy Gartner developed what it calls the Hype Cycle to describe the process humans go through when adopting new technology. What is novel is the outcome. The Hype Cycle assumes there will be a process of education, utilization, rationalization and finally adoption. That is a very linear process that takes a person from discovery to integration. But what I'm describing is a situation where the technology is changing so fast, and its implications are so unknown, that we can never truly go through a process like the one Gartner describes. We are stuck being triggered, experiencing inflated expectations and then being disillusioned over and over again, without the ability to climb out.

To understand this crazy cycle, it is important to take a moment to dive into the FoMO phenomenon. In an overview of FoMO hosted by the NIH, Mayank Gupta and Aditya Sharma describe how this phenomenon appeared on our radar in the early 2000s and made it into the dictionary as an established concept only a decade later.

I was also struck by what they note about its impact on decision making and on the ability to come to resolution and move forward: "FoMO broadcasts more options than can be pursued, impacting an individual's decision making in personal and professional settings. It may impair an individual’s ability to make commitments and agreements, as one feels inclined to keep options open and not risk losing out on an important, potentially life-changing experience which may offer greater meaning and personal gratification."

Essentially, our hyper-connected world has created a constant sense that what I'm doing this moment is not enough. Keeping up with the Joneses next door and eyeing the greener grass has gone from a weekend pastime for those who want to impress their neighbors to a minute-by-minute fixation.

And the disruption of AI is simply adding jet fuel to that fire. That jet fuel is epitomized in a phrase I hear often: "AI won't replace you, someone who uses AI will replace you." While this is supposed to be reassuring, it actually does greater harm, because now the enemy is not the robot but the human who is using the robot to make you irrelevant. It takes FoMO and gives it an AI boost.

A good indicator of where people are with AI in their work can be found in the American Psychological Association's "2024 Work in America" survey. It highlights a few key insights: "More than a third of workers (35%) are intentionally using AI monthly or more often to assist with their work, yet only 18% reported knowing that their employer has an official policy about acceptable uses of AI. Half of workers (50%) said their employer has no such policy, and close to one third (32%) were unsure. Some employees (41%) worry that AI will eventually make some or all of their job duties obsolete in the future."

What these stats highlight for me is a workforce, and by extension a population, living in the ambiguity of a highly disruptive moment: not wanting to be left out, but unsure about the implications of diving in. We can't live there for long. We need governments, social sector partners, tech companies and thought leaders to show people a way out of the endless loop this moment has created.

As a writer, innovator and technology implementer, I have spent several years writing about the opportunities and challenges of integrating AI into our lives, work and societies. You can find all my writing here. Many are writing, advocating and predicting. But I fear that we are hearing mostly from the extreme edges, and little help is coming to the middle. I wonder if we could stop trying to convince people of a position for a moment, listen to them where they are, and then help them find solutions and coping mechanisms. Until we help the moth find the light without getting burned, we will have growing anxiety and a lost generation with no way to productively resolve the contradictions of the moment.

What can you do to help those around you respond to this moment?

Photo Credit: Photo by Evan Wise on Unsplash
